A look into the undocumented IPC mechanism.

Introduction

Buckle up, IPC is fun!

Background

Advanced Local Procedure Call (ALPC) is an undocumented IPC implementation aimed at replacing LPC for internal use within Microsoft.

It is a high-speed, scalable and secure facility for passing arbitrarily sized messages across processes and privilege levels.

ALPC is crucial and ubiquitous in the modern Windows ecosystem.

As an example, Remote Procedure Call (RPC) uses ALPC under the hood for all local RPC calls over the ncalrpc transport, as well as for kernel-mode RPC.

All Windows processes and threads communicate with the Windows subsystem process (csrss.exe) on startup using ALPC.

When a process raises an unhandled exception, KernelBase!UnhandledExceptionHandler communicates with the Windows Error Reporting (WER) service through ALPC to report the crash.

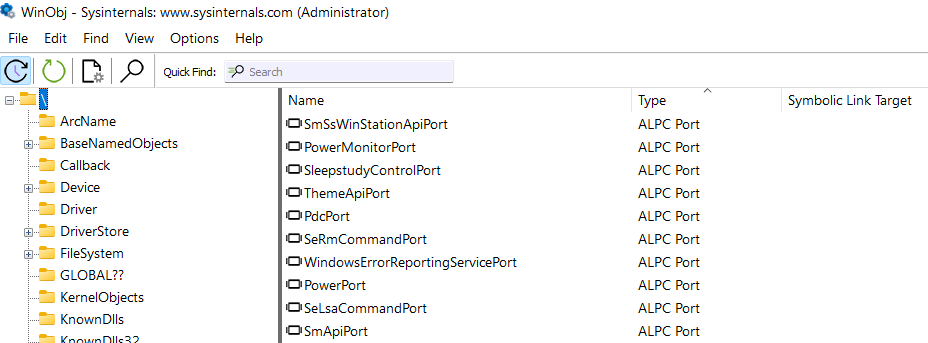

If that does not convince you, check out WinObj.

Many Windows components, like the Power Monitor and WER, have listening ALPC ports.

From a security point of view, it’s a neat attack surface to inspect, since messages sent from user mode to these internal ALPC ports cross the privilege boundary.

Even if we assume the core implementation of ALPC to be secure, components using ALPC might contain logic bugs when dealing with incoming messages.

Communication Flow

Internally, ALPC uses only a single executive object, the ALPC_PORT object, to maintain state during communication.

```c
//0x1d8 bytes (sizeof)
```

This port object can represent one of the following ports:

- Server Connection Port

- Server Communication Port

- Client Communication Port

- Unconnected Communication Port

To begin with, the server calls NtAlpcCreatePort to create a named server connection port, then uses NtAlpcSendWaitReceivePort to listen for incoming messages on that port.

A client can then attempt to connect via NtAlpcConnectPort.

If the server chooses to accept the connection, it calls NtAlpcAcceptConnectPort.

In doing so, the connection is established, and 2 more port objects are created. The client is given a handle to a client communication port, while the server is given a handle to a server communication port.

At this point, the client can send a message using NtAlpcSendWaitReceivePort to the client communication port, while the server continues to listen using the same API on the server connection port.

The curious reader might question the purpose of the server communication port, since the server only uses its connection port to listen for messages.

It turns out that the server communication port is merely used to identify a unique client, and to impersonate the client when necessary. It would be quite inefficient if the server had to create a thread to listen on a different port every time a new client connects.
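To make the port roles concrete, here is a toy model in plain C. It is not the real ALPC API: `ToyPort`, `toy_create_port`, `toy_accept` and `toy_deliver` are hypothetical names. It shows how accepting a connection yields two linked communication ports, and how a message arriving on the server's connection port can still be attributed to one client through its server communication port.

```c
#include <stddef.h>

/* The four roles an ALPC_PORT object can play (toy model, not the real API). */
typedef enum {
    SERVER_CONNECTION_PORT,
    SERVER_COMMUNICATION_PORT,
    CLIENT_COMMUNICATION_PORT,
    UNCONNECTED_COMMUNICATION_PORT
} PortKind;

typedef struct ToyPort {
    PortKind kind;
    struct ToyPort *peer;        /* other end of an established connection */
    struct ToyPort *connection;  /* server connection port it belongs to */
} ToyPort;

/* Stands in for NtAlpcCreatePort creating a named connection port. */
static ToyPort toy_create_port(void) {
    ToyPort p = { SERVER_CONNECTION_PORT, NULL, NULL };
    return p;
}

/* Stands in for a successful connect + accept: one communication port is
 * created per side and the two are linked. Returns -1 if 'listener' is not
 * a server connection port. */
static int toy_accept(ToyPort *listener, ToyPort *server_comm, ToyPort *client_comm) {
    if (listener->kind != SERVER_CONNECTION_PORT)
        return -1;
    server_comm->kind = SERVER_COMMUNICATION_PORT;
    client_comm->kind = CLIENT_COMMUNICATION_PORT;
    server_comm->peer = client_comm;
    client_comm->peer = server_comm;
    server_comm->connection = listener;
    client_comm->connection = listener;
    return 0;
}

/* A client message lands on the port the server listens on (the connection
 * port); the server communication port identifies which client sent it. */
static ToyPort *toy_deliver(const ToyPort *client_comm, ToyPort **sender_id) {
    *sender_id = client_comm->peer;
    return client_comm->connection;
}
```

In this model one listener serves every client, and the per-client identity travels with the message instead of requiring a dedicated listening thread per port.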

How does the server then distinguish between a connection request and a message request?

It checks the PORT_MESSAGE structure (actually more of a header) that sits on top of every actual message:

```c
//0x28 bytes (sizeof)
```

where the Type member (u2.s2.Type) identifies the message type.

Most of this structure is filled in by the kernel when you actually send the message; the sender mainly supplies the data and total lengths.
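For reference, here is a portable sketch of that header, modeled on the PORT_MESSAGE definition in Process Hacker's headers. The fixed-width stand-ins for CSHORT, HANDLE and SIZE_T (and the `_SK` suffix) are assumptions so the layout can be checked off-Windows; it assumes a 64-bit target. The static asserts confirm the members add up to 0x28 bytes.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Portable stand-ins for the Windows types (x64 sizes assumed). */
typedef int16_t  CSHORT_SK;    /* CSHORT is a short */
typedef uint64_t HANDLE_SK;    /* HANDLE is pointer-sized on x64 */

typedef struct {
    HANDLE_SK UniqueProcess;
    HANDLE_SK UniqueThread;
} CLIENT_ID_SK;

typedef struct {
    union {
        struct { CSHORT_SK DataLength; CSHORT_SK TotalLength; } s1;
        uint32_t Length;
    } u1;
    union {
        struct { CSHORT_SK Type; CSHORT_SK DataInfoOffset; } s2;  /* message type lives here */
        uint32_t ZeroInit;
    } u2;
    union {
        CLIENT_ID_SK ClientId;      /* sender's process and thread IDs */
        double DoNotUseThisField;   /* forces 8-byte alignment */
    };
    uint32_t MessageId;
    union {
        uint64_t ClientViewSize;    /* SIZE_T on x64 */
        uint32_t CallbackId;
    };
} PORT_MESSAGE_SK;

static_assert(sizeof(PORT_MESSAGE_SK) == 0x28, "0x28-byte x64 layout");
static_assert(offsetof(PORT_MESSAGE_SK, MessageId) == 0x18, "MessageId offset");
```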

All in all, the high-level flow is similar to a regular Unix socket, but ALPC, being “advanced”, supports much more customization.

Message Model

ALPC supports 2 methods of passing buffers across processes.

Double Buffer Mechanism

This is the default mechanism.

The kernel copies the buffer from the source process into kernel memory, then copies it from kernel memory into the target process.

This method is less efficient because the data is copied twice and held in 2 buffers at the same time. Messages are also capped at 64KB on current Windows 11; the limit can be obtained via the AlpcMaxAllowedMessageLength API.
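A minimal model of the double-buffer path follows. `SKETCH_MAX_MESSAGE_LENGTH` is a hypothetical hard-coded limit; real code should query AlpcMaxAllowedMessageLength. The sketch only illustrates the two copies and the size cap, not the kernel's actual implementation.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical limit for the sketch; query AlpcMaxAllowedMessageLength in real code. */
#define SKETCH_MAX_MESSAGE_LENGTH 0x10000u

/* Models the double-buffer copy: sender buffer -> kernel buffer -> receiver buffer.
 * Returns 0 on success, -1 if the message is too large or allocation fails. */
static int double_buffer_transfer(const void *src, size_t len, void *dst, size_t dst_cap) {
    if (len > SKETCH_MAX_MESSAGE_LENGTH || len > dst_cap)
        return -1;
    void *kernel_copy = malloc(len ? len : 1);   /* stand-in for kernel memory */
    if (kernel_copy == NULL)
        return -1;
    memcpy(kernel_copy, src, len);                /* copy 1: capture from sender */
    memcpy(dst, kernel_copy, len);                /* copy 2: deliver to receiver */
    free(kernel_copy);
    return 0;
}
```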

Section Mechanism

The message can be stored in an ALPC section object, which the two processes map views of.

An ALPC section object is like an advanced super-encapsulated-reference-counted-garbage-collected normal section object, created through the NtAlpcCreatePortSection API.

For each section, there are some security options available.

- Secure mode allows a maximum of 2 views (1 client) of the section. This is used when a server wants to share data privately with 1 client.

- Views can be marked as read-only for clients, so only the server can write.

To use the section mechanism, the sender has to send its section handle to the receiver along with the message, using the ALPC_DATA_VIEW_ATTR attribute.

More on attributes later.
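The two section security options listed above boil down to simple admission rules. The toy model below (hypothetical names, no real ALPC calls) encodes them: a secure section refuses a third view, and a client view on a read-only section refuses writes.

```c
#include <stdbool.h>

/* Toy model of the ALPC section security options; names are hypothetical. */
typedef struct {
    bool secure;            /* secure mode: at most 2 views (server + 1 client) */
    bool client_read_only;  /* clients may map, but only the server may write */
    int  views;             /* views currently mapped */
} SectionModel;

/* Returns true (and records the view) if the request is allowed by the model. */
static bool section_map_view(SectionModel *s, bool is_server, bool want_write) {
    if (s->secure && s->views >= 2)
        return false;                  /* a third view is refused */
    if (!is_server && want_write && s->client_read_only)
        return false;                  /* clients cannot get a writable view */
    s->views++;
    return true;
}
```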

Buffer Layout

As for the buffer content, both mechanisms require it to contain the PORT_MESSAGE struct before the actual message.

The actual message can contain arbitrary binary data.
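A sketch of that layout: header first, payload immediately after. `SketchHeader` is a deliberately simplified, hypothetical stand-in that keeps only the two length fields a sender fills in; the real PORT_MESSAGE is larger and has more members.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Simplified, hypothetical header keeping only the length fields.
 * The real PORT_MESSAGE is 0x28 bytes on x64. */
typedef struct {
    uint16_t DataLength;   /* payload bytes after the header */
    uint16_t TotalLength;  /* header + payload */
} SketchHeader;

/* Writes header + payload contiguously into buf.
 * Returns the total size, or 0 if it does not fit. */
static size_t build_message(uint8_t *buf, size_t cap, const void *payload, size_t len) {
    size_t total = sizeof(SketchHeader) + len;
    if (total > cap || total > UINT16_MAX)
        return 0;
    SketchHeader h = { (uint16_t)len, (uint16_t)total };
    memcpy(buf, &h, sizeof h);               /* header first ...           */
    memcpy(buf + sizeof h, payload, len);    /* ... payload right after it */
    return total;
}
```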

Request and Response

ALPC supports 3 modes of operation: synchronous requests, asynchronous requests and datagrams.

Synchronous Request

NtAlpcSendWaitReceivePort blocks after sending a message, until a response comes in.

The sender can choose this mode by providing ALPC_MSGFLG_SYNC_REQUEST as the second argument to the API.

Datagram

Basically UDP packets where no response is anticipated.

Non-blocking of course.

Asynchronous Request

This is where things get interesting.

How is the sender supposed to know when to read the response if the function is asynchronous?

It turns out there are 3 notification mechanisms available for this.

ALPC Completion List

Under this mode, it’s up to the client and server to come up with a synchronisation method as no default notifications are provided.

Each completion list entry is a glorified Memory Descriptor List (MDL):

```c
//0xa0 bytes (sizeof)
```

This allows efficient copy of messages at the physical level, but also means no inherent alerts are given to the sender.

This mode can also only be used by user-mode senders.
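Since the completion list gives no alerts, the consumer has to poll. The sketch below uses a single-producer/single-consumer ring buffer with C11 atomics as a stand-in for memory shared by both sides. It is not the real ALPC completion list layout, only an illustration of the kind of synchronisation the two parties have to agree on themselves.

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

#define RING_SLOTS 8u   /* power of two, so indexes can be masked */

/* Single-producer / single-consumer ring that both sides would map.
 * Nothing signals the consumer on insert: it has to poll. */
typedef struct {
    _Atomic uint32_t head;          /* next slot the consumer reads */
    _Atomic uint32_t tail;          /* next slot the producer writes */
    uint32_t slots[RING_SLOTS];     /* e.g. IDs of completed messages */
} Ring;

static bool ring_push(Ring *r, uint32_t value) {
    uint32_t tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    uint32_t head = atomic_load_explicit(&r->head, memory_order_acquire);
    if (tail - head == RING_SLOTS)
        return false;                                      /* full */
    r->slots[tail & (RING_SLOTS - 1)] = value;
    atomic_store_explicit(&r->tail, tail + 1, memory_order_release);  /* publish */
    return true;
}

/* Poll once; returns false if nothing has completed yet. */
static bool ring_poll(Ring *r, uint32_t *out) {
    uint32_t head = atomic_load_explicit(&r->head, memory_order_relaxed);
    uint32_t tail = atomic_load_explicit(&r->tail, memory_order_acquire);
    if (head == tail)
        return false;                                      /* empty */
    *out = r->slots[head & (RING_SLOTS - 1)];
    atomic_store_explicit(&r->head, head + 1, memory_order_release);  /* free slot */
    return true;
}
```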

IO Completion Port

Completion ports are well documented at https://learn.microsoft.com/en-us/windows/win32/fileio/i-o-completion-ports and provide a lot of flexibility in terms of concurrency control.

Kernel Callbacks

Lastly, kernel-mode senders can register a good old callback with NtAlpcSetInformation.

The callback is invoked whenever a message is received.
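The callback model in miniature: register once, get invoked on every delivery. This is a user-space toy with hypothetical names (`CallbackPort`, `port_register_callback`, `port_deliver`, `count_messages`); it does not reflect the kernel's actual registration structures.

```c
#include <stddef.h>

/* Hypothetical callback signature: receives the registered context and the message. */
typedef void (*MessageCallback)(void *context, const char *message);

typedef struct {
    MessageCallback callback;
    void *context;
} CallbackPort;

static void port_register_callback(CallbackPort *port, MessageCallback cb, void *ctx) {
    port->callback = cb;
    port->context = ctx;
}

/* Returns 1 if a registered callback consumed the message, 0 otherwise. */
static int port_deliver(CallbackPort *port, const char *message) {
    if (port->callback == NULL)
        return 0;
    port->callback(port->context, message);   /* invoked on every delivery */
    return 1;
}

/* Example callback: counts messages in an int passed as context. */
static void count_messages(void *context, const char *message) {
    (void)message;
    ++*(int *)context;
}
```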

Attributes

The other main benefit ALPC has over LPC (besides asynchrony) is the ability to securely and consistently transport certain types of data.

ALPC supports a handful of types, with Handle and View being the most prevalent.

In the LPC days, if a client wanted to share a handle with a server, it had to send an LPC message containing the numerical value of the handle, and the server would duplicate it after receiving the message.

This is quite messy and insecure for many reasons.

First of all there’s no consistent way to verify the type of object the handle is pointing to, thus leading to the possibility of type confusion bugs.

Each server and client had to implement their own protocol and logic, which is a big red cross in secure programming.

Even if the server was able to verify the type, a malicious client could race it by closing the handle and opening multiple handles to other objects before the server duplicates the numerical value, reintroducing the same type confusion.

It’s nearly impossible to achieve security when the client has sole control over the acquisition and release of the handle.

ALPC mitigates this through attributes, which are just special types of data transported with the help of the kernel.
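The race above, and why resolving the object once at send time closes it, can be shown with a toy handle table in which a closed handle value is reused by the next open. Everything here (`HandleTable`, `naive_receive`, `captured_receive`, `swap_to_process`) is hypothetical and only models the logic, not real handle tables.

```c
#include <stdbool.h>

/* Toy handle table: a handle is an index, and a freed index is reused by the
 * next open, which is what makes the numeric-value protocol racy. */
typedef enum { OBJ_NONE, OBJ_FILE, OBJ_PROCESS } ObjType;

#define TABLE_SIZE 4
typedef struct { ObjType slot[TABLE_SIZE]; } HandleTable;

static int table_open(HandleTable *t, ObjType type) {
    for (int h = 0; h < TABLE_SIZE; h++)
        if (t->slot[h] == OBJ_NONE) { t->slot[h] = type; return h; }
    return -1;
}

static void table_close(HandleTable *t, int h) { t->slot[h] = OBJ_NONE; }

/* LPC-style server: checks the numeric handle, then duplicates it later.
 * 'between' simulates whatever the client manages to do in that window. */
static ObjType naive_receive(HandleTable *client, int h, void (*between)(HandleTable *, int)) {
    if (client->slot[h] != OBJ_FILE)
        return OBJ_NONE;            /* check: looks like a file */
    between(client, h);             /* race window */
    return client->slot[h];         /* use: duplicates whatever is there now */
}

/* ALPC-style: the object is resolved and type-checked once, at send time. */
static ObjType captured_receive(HandleTable *client, int h, void (*between)(HandleTable *, int)) {
    ObjType captured = client->slot[h];
    between(client, h);
    return captured == OBJ_FILE ? captured : OBJ_NONE;
}

/* Malicious client: close the file and reopen something else in its slot. */
static void swap_to_process(HandleTable *t, int h) {
    table_close(t, h);
    table_open(t, OBJ_PROCESS);     /* lands in the freed slot */
}
```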

Handle Case Study

If the client wants to securely send a handle to the server under ALPC, the client fills in the ALPC_MESSAGE_HANDLE_INFORMATION structure

```c
typedef struct _ALPC_MESSAGE_HANDLE_INFORMATION
```

and enables the sending of handle information.

```c
msgAttr->ValidAttributes |= ALPC_MESSAGE_HANDLE_ATTRIBUTE;
```

Then the client uses NtAlpcSendWaitReceivePort to send the attribute over in the 4th argument SendMessageAttributes.

The kernel now verifies that the handle is valid and points to an object of the correct type, and makes a kernel copy of the handle if the checks pass.

This ensures that there will still be a reference to the original object even if the client closes its handle.

When the message reaches the server, the server has to explicitly request to receive a handle in the ReceiveMessageAttributes argument.

The kernel verifies if the type of handle the server expects matches the type of handle that’s sent over, and adds the handle to the server process’s handle table if the checks pass.

At this point, the handle transportation is completed, and the kernel releases its kernel copy of the handle.

The above code snippet won’t make sense for now, but it will after the code review section.
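The lifetime rules of this handle case study can be modeled with a plain reference count. In this hypothetical sketch, `kernel_capture` stands in for the send-side type check and kernel copy, and `kernel_deliver` for the receive-side type check, handle insertion and release of the kernel copy. The point is that the object survives the client closing its handle early.

```c
#include <stdbool.h>
#include <stddef.h>

/* Toy reference-counted object; none of these are real ALPC functions. */
typedef enum { TYPE_FILE, TYPE_EVENT } ObjectType;
typedef struct { ObjectType type; int refs; } Object;

static Object *obj_ref(Object *o)   { o->refs++; return o; }
static void    obj_deref(Object *o) { o->refs--; }

typedef struct { Object *captured; } InFlightHandle;

/* Send: check the object type and take the kernel's own reference. */
static bool kernel_capture(InFlightHandle *m, Object *o, ObjectType allowed) {
    if (o->type != allowed)
        return false;
    m->captured = obj_ref(o);
    return true;
}

/* Receive: the server states the type it expects; on a match it gets its own
 * reference (its new handle) and the kernel copy is released either way. */
static Object *kernel_deliver(InFlightHandle *m, ObjectType expected) {
    Object *o = m->captured;
    m->captured = NULL;
    if (o->type != expected) {
        obj_deref(o);                /* drop kernel copy, deliver nothing */
        return NULL;
    }
    obj_ref(o);                      /* reference owned by the server's handle */
    obj_deref(o);                    /* kernel copy released */
    return o;
}
```

Usage: with the client's handle holding one reference, capture raises the count to 2, the client may close (1), delivery hands that reference to the server (still 1), and the server's own close brings it to 0.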

Code Review

This section aims to review the code for a dummy ALPC server and client.

Full source code can be found on my GitHub.

Scenario

Implement an ALPC server that checks for a secret during the connect request, and rejects the request if the secret is not satisfied. If the connection is successful, the server will expect a file handle from the client and log a message to the file.

Implement an ALPC client that passes the secret check, opens a file handle and shares it with the server.

Setup

We’ll need definitions of structures and constants that ALPC uses.

Process Hacker’s source code provides a header file called ntalpcapi.h which is pretty neat, but it contains some outdated structures for 64-bit Windows.

I’ve updated it and uploaded the header file here.

Server Component

Let’s start with the server.

The server first has to create a connection port.

```c
ALPC_PORT_ATTRIBUTES alpcAttr = { 0 };
```

As mentioned above, the kernel verifies the type of handle sent to the receiver, so we have to explicitly allow duplication of file handles.

After creating the port, the server has to listen for incoming connection requests.

```c
PORT_MESSAGE64 *msgBuffer = NULL;
```

First a message buffer is allocated to hold 64KB of possible data as well as the PORT_MESSAGE header.

Then we cap the request size at sizeof(SECRET) + sizeof(PORT_MESSAGE64) and listen for incoming connection requests.

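The server can check whether a request is a connection request with (msgBuffer->u2.s2.Type & 0xfff) == LPC_CONNECTION_REQUEST. The parentheses matter: == binds more tightly than & in C, so without them the expression always evaluates to 0.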

The code above blocks until a message request comes in.
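Putting the two checks together, here is a sketch of the validation the server performs before accepting. The header size assumes x64, `LPC_CONNECTION_REQUEST` uses the value from Process Hacker's headers, and `SKETCH_SECRET` is a hypothetical placeholder since the post does not show the real secret. The `type` and `data_length` parameters stand in for u2.s2.Type and u1.s1.DataLength.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define SKETCH_HEADER_SIZE 0x28        /* sizeof(PORT_MESSAGE) on x64 */
#define LPC_CONNECTION_REQUEST 10      /* value from Process Hacker's headers */

/* Hypothetical secret: the post does not show the real SECRET value. */
static const char SKETCH_SECRET[] = "open sesame";

/* Mask off the upper flag bits, then compare. Without the parentheses,
 * type & 0xfff == LPC_CONNECTION_REQUEST parses as
 * type & (0xfff == LPC_CONNECTION_REQUEST), i.e. type & 0, always false. */
static bool is_connection_request(int16_t type) {
    return (type & 0xfff) == LPC_CONNECTION_REQUEST;
}

/* 'msg' points at the received buffer (header first, payload after it). */
static bool should_accept(const uint8_t *msg, int16_t type, size_t data_length) {
    if (!is_connection_request(type))
        return false;
    if (data_length != sizeof SKETCH_SECRET)
        return false;                  /* payload must be exactly the secret */
    return memcmp(msg + SKETCH_HEADER_SIZE, SKETCH_SECRET, sizeof SKETCH_SECRET) == 0;
}
```

The boolean result maps naturally onto the accept/decline decision passed to NtAlpcAcceptConnectPort.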

If the check passes, the server accepts the connection.

```c
PORT_MESSAGE64 acceptRequest = { 0 };
```

The server accepts the connection by sending back a PORT_MESSAGE header with the same MessageId as the request message.

It can optionally also send back data for the client to consume if the client is listening.

The last argument of NtAlpcAcceptConnectPort determines whether the connection is accepted or declined.

Assuming the connection is accepted, the server now has to initialize an attribute structure to hold the incoming handle.

```c
ALPC_MESSAGE_ATTRIBUTES *msgAttr = NULL;
```

The allocation code first computes the required buffer size based on the attributes being allocated.

The server can pass in one or more attributes ORed together, and they will all be allocated at once, contiguously in memory.

For our example, we only allocate for the handle attribute, so in memory it will be the ALPC_MESSAGE_ATTRIBUTES buffer followed by the ALPC_MESSAGE_HANDLE_INFORMATION buffer.

AlpcInitializeMessageAttribute sets the msgAttr->AllocatedAttributes member.
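The contiguous layout can be modeled as a header of two 32-bit masks followed by each allocated attribute blob in a fixed order, which is the kind of arithmetic AlpcGetMessageAttribute saves us from. The flag values, blob sizes and ordering below are hypothetical placeholders, not the real values from ntalpcapi.h, and alignment padding is ignored.

```c
#include <stddef.h>
#include <stdint.h>

/* Header in front of the attribute blobs: two 32-bit masks. */
typedef struct {
    uint32_t AllocatedAttributes;
    uint32_t ValidAttributes;
} AttrHeader;

/* Hypothetical flags, sizes and order, for the sketch only. */
enum { SK_ATTR_FIRST = 0x4, SK_ATTR_SECOND = 0x2, SK_ATTR_HANDLE = 0x1 };

typedef struct { uint32_t flag; size_t size; } AttrInfo;
static const AttrInfo kAttrs[] = {
    { SK_ATTR_FIRST, 24 },
    { SK_ATTR_SECOND, 32 },
    { SK_ATTR_HANDLE, 20 },
};
#define ATTR_COUNT (sizeof kAttrs / sizeof kAttrs[0])

/* Bytes to allocate: the header plus every requested blob, back to back. */
static size_t attr_buffer_size(uint32_t flags) {
    size_t total = sizeof(AttrHeader);
    for (size_t i = 0; i < ATTR_COUNT; i++)
        if (flags & kAttrs[i].flag)
            total += kAttrs[i].size;
    return total;
}

/* Offset of one blob, skipping the allocated blobs that precede it.
 * Returns 0 (the header's own offset) if 'which' was not allocated. */
static size_t attr_offset(uint32_t allocated, uint32_t which) {
    size_t offset = sizeof(AttrHeader);
    for (size_t i = 0; i < ATTR_COUNT; i++) {
        if (!(allocated & kAttrs[i].flag))
            continue;
        if (kAttrs[i].flag == which)
            return offset;
        offset += kAttrs[i].size;
    }
    return 0;
}
```

With only the handle attribute allocated, its blob sits immediately after the 8-byte header, matching the layout described above.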

Now we can proceed to the main server loop to listen for messages from the connected client.

```c
ALPC_MESSAGE_HANDLE_INFORMATION *handleAttr = NULL;
```

The server again listens for the maximum buffer size allowed via the Double Buffer mechanism, but also listens for an attribute.

Upon receiving a message, the server can check if a handle attribute exists by checking the msgAttr->ValidAttributes member.

If a handle attribute does exist, AlpcGetMessageAttribute helps us do the arithmetic to get to the ALPC_MESSAGE_HANDLE_INFORMATION buffer in memory, where we can extract the handle.

The server can do whatever it wants with the handle now, but more importantly it must remember to close it after use.

```c
/* It's now our responsibility to close this handle */
```

Client Component

The client component also starts by initializing a message buffer, as well as writing the secret to it.

```c
HANDLE hFile = INVALID_HANDLE_VALUE;
```

It also allocates a buffer to receive incoming messages from the server.

Not really required for our use case, but ALPC doesn’t like not having one.

Next, the client attempts a connection:

```c
NTSTATUS status = STATUS_SUCCESS;
```

The ALPC_MSGFLG_SYNC_REQUEST flag is passed to request a blocking connection, and a client communication port handle is returned if successful.

Upon establishing a connection to the server, the client can now share its handle.

```c
ALPC_MESSAGE_ATTRIBUTES *msgAttributes = NULL;
```

The client allocates the attribute buffers the same way the server did, and indicates to ALPC that it’s going to send over a handle by setting the handle attribute bit in the msgAttributes->ValidAttributes member.

Finally, it fills in the handle to send and specifies that the handle is to be duplicated with the exact same access on the receiver’s end.

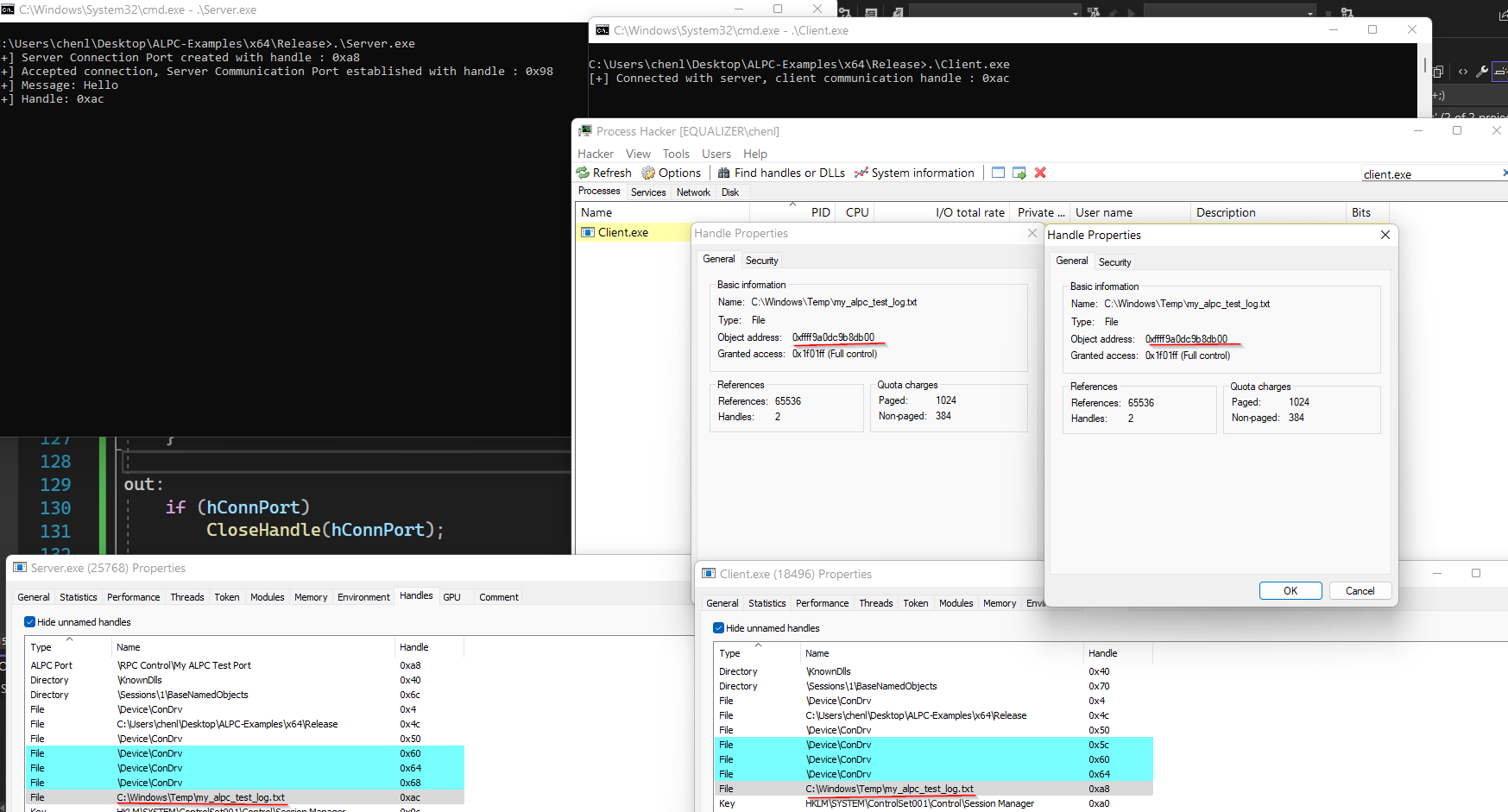

Demo

As shown above, both the client and server processes possess a handle to the same object in kernel memory, with the same access.

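Even though the client opened the file without specifying any sharing mode, it is still able to share the handle with another process through ALPC. This is expected: the sharing mode only restricts other attempts to open the file, whereas a duplicated handle refers to the same file object.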

But Why?

If you’re thinking “yo what’s the point of practising writing ALPC servers and clients, it’s undocumented anyways and you’ll never have to write one besides those already present in the real world”, think again.

Besides writing clients to pwn badly written Windows server components, you might get a chance to perform name squatting and get a privileged client to connect to you.

Generic ALPC Bug Class

In his talk (at around the 32-minute mark), Alex Ionescu mentions a generic bug class that can occur in arbitrary ALPC server implementations due to the sheer complexity of ALPC resource management.

Basically when a sender shares a view attribute with a receiver and the receiver accepts, the view is mapped in the receiver’s address space.

At this point it’s up to the receiver to free this address space when it’s no longer required.

In order to mark the memory as free, the receiver can either:

- Set ALPC_VIEWFLG_UNMAP_EXISTING in the view attribute

- Manually call NtAlpcDeleteSectionView

However, this merely marks the memory to be recycled, but ALPC will not actually recycle it until the receiver replies to the message, thereby completing the processing of this message and its memory.

Even for datagram requests where no actual reply is sent out, the server still has to “reply” for the memory to be freed.

Failure to do so leaves the memory resident, and a malicious client can flood the server with views to cause a DoS.

The same can be said about handles, where it’s the receiver’s responsibility to close them.

Takeaway: if you’re too lazy to do a code audit, just try to spam views at the target server and use Process Hacker to check its memory usage.
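The bookkeeping behind this bug class can be captured in a toy model: freeing a view only moves it to a pending state, and only a reply (even to a datagram) reclaims it. `ViewBudget` and its functions are hypothetical, and the view sizes in the usage are arbitrary.

```c
#include <stddef.h>

/* Toy accounting of views mapped into a server on behalf of one client. */
typedef struct {
    size_t resident_bytes;   /* still mapped in the server */
    size_t pending_bytes;    /* marked free, waiting for the reply */
} ViewBudget;

static void on_view_received(ViewBudget *b, size_t size) { b->resident_bytes += size; }

/* Unmap flag or explicit delete: marks the view, releases nothing yet. */
static void mark_view_free(ViewBudget *b, size_t size) { b->pending_bytes += size; }

/* Replying completes the message, so marked views are actually reclaimed. */
static void reply_to_message(ViewBudget *b) {
    b->resident_bytes -= b->pending_bytes;
    b->pending_bytes = 0;
}
```

A server that marks every view free but never replies keeps growing `resident_bytes` for as long as the client keeps sending, which is exactly the flood described above.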

Exercise

Armed with some ALPC knowledge and ntalpcapi.h, it’s time to reverse engineer a real ALPC server interface.

- Download WerSvc.dll from patch KB4501371 from Winbindex here.

- Starting from CWerService::_StartLpcServer, reverse engineer the ALPC server implementation until you find the switch statements that perform various Windows Error Reporting tasks.

- Write client code to trigger the CWerService::SvcCollectMemoryInfo task.

Bonus

Audit the CWerService::SvcCollectMemoryInfo task implementation and try to rediscover CVE-2019-1342.

Conclusion

In this post we’ve explored the theory behind ALPC, along with enough of its internals to make it semi-documented and understandable while reverse engineering.

Keep in mind that ALPC is an extremely complex component, and we’ve not yet analysed how it works at the kernel level.

You can do that if you aim to find bugs at the implementation level, or if you are a third-party developer writing ALPC code (which I wouldn’t recommend).

Otherwise, it’s perfectly fine to stop here if you’re just trying to audit some ALPC server interface for bugs there.

:)